Yesterday (August 2, 2025), I had the opportunity to attend the Agentic AI Summit at UC Berkeley. The event started at 9 a.m., but I had a morning run planned with friends, so I wasn’t able to arrive until around 1 p.m.

Despite missing the morning sessions, I attended two sessions that really stood out:

- Foundations of Agents

- Next Generation of Enterprise Agents

Foundations of Agents

The first talk I attended was part of the Foundations of Agents session, given by Dr. Dawn Song, Professor at UC Berkeley. Her talk, titled “Towards Building Safe and Secure Agentic AI,” explored the dual-edged nature of AI technology.

Who will benefit most from AI: the good guys or the bad guys?

That question stuck with me. Dr. Song highlighted how AI can both protect and attack systems. The talk emphasized the critical need to secure agents, especially in terms of privacy and vulnerability. She introduced GuardAgent: Safeguard LLM Agents by a Guard Agent via Knowledge-Enabled Reasoning, a research project aimed at evaluating and strengthening AI agent security. The explanation of how GuardAgent works gave me insight into how researchers are building AI to be more robust and safe.

The next talk was by Ed Chi from Google, titled “Google Gemini Era: Bringing AI to Universal Assistant and the Real World.” He introduced Project Astra, a Google initiative focused on developing truly universal AI assistants.

During his talk, he asked the audience, “How many of you have a personal assistant?” Only a few hands went up. He then said, “Imagine if we all had one. With Project Astra, that’s possible.”

He shared impressive demo videos showcasing the power and responsiveness of AI agents. Though his talk was short, it was one of the most compelling presentations of the day.

The third talk, “Automating Discovery” by Jakub Pachocki, Chief Scientist at OpenAI, was delivered remotely via Zoom. Unfortunately, due to poor audio quality and connection issues, I wasn’t able to follow much of it and decided to skip it.

The last talk of the session was by Sergey Levine, who discussed Multi-Turn Reinforcement Learning for LLM Agents.

Here’s a simplified breakdown:

- Multi-Turn: In real-world scenarios, decisions are not made in isolation; they unfold over multiple steps. Agents need to plan, iterate, and adjust based on feedback.

- Reinforcement Learning (RL): Teaching AI through rewards and penalties, enabling it to learn optimal actions based on past experiences.

Sergey explained how agents can become more efficient by “remembering” past decisions and using them to improve future outcomes, reducing resource use while improving decision-making. It was a technical but deeply insightful talk.
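To make the two ideas above a bit more concrete, here is a toy sketch of my own. It is not from Sergey's talk and has nothing to do with LLMs. It uses plain tabular Q-learning on a made-up five-cell corridor, where the agent has to take several turns in a row to reach a goal. The corridor, the reward values, and every name in the code (`step`, `train`, `greedy_policy`) are assumptions chosen purely for illustration.

```python
import random

# Hypothetical toy environment: a corridor of 5 cells, goal at the last cell.
N_STATES = 5
ACTIONS = (-1, +1)  # step left or step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.1  # learning rate, discount, exploration rate


def step(state, action):
    """Apply one action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    # Reward for reaching the goal, small penalty for every extra turn.
    reward = 1.0 if done else -0.01
    return next_state, reward, done


def train(episodes=500, max_turns=100, seed=0):
    """Learn a table of action values from repeated multi-turn episodes."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_turns):
            # Mostly act on what was learned so far, occasionally explore.
            if rng.random() < EPSILON:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # The value of this turn depends on what can be earned in later turns.
            future = 0.0 if done else GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (reward + future - q[state][a])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Pick the highest-valued action in each state."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: row[i])] for row in q]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

The table `q` plays the role of the "memory" of past decisions: each update folds the outcome of one turn, plus the estimated value of the turns that follow, back into the agent's future choices. After training, the greedy policy is expected to step right toward the goal from every non-goal cell.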

Next Generation of Enterprise Agents

After a short break, we moved into the Next Generation of Enterprise Agents session, which featured the following speakers:

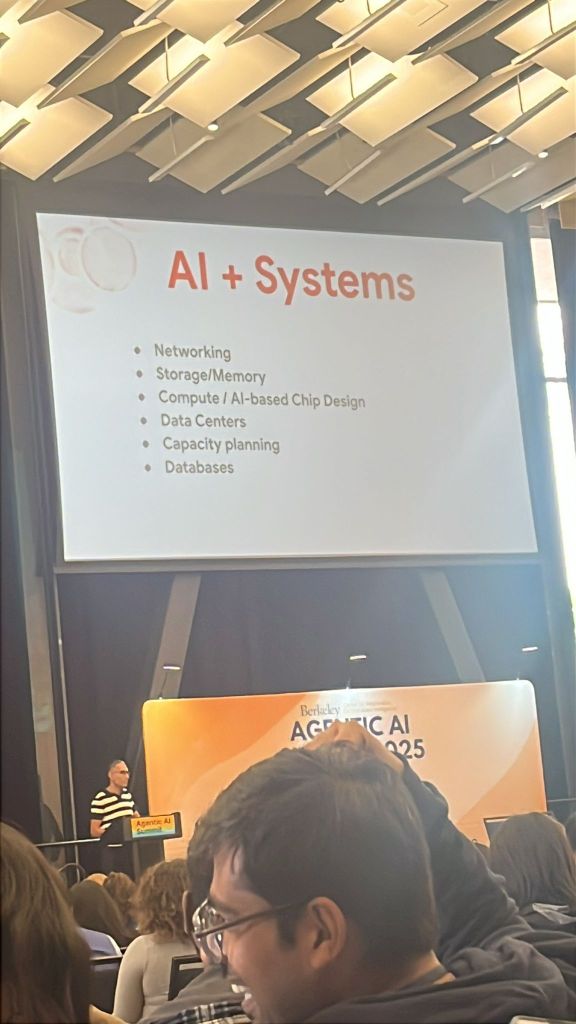

- Burak Gokturk – VP, ML, Systems & Cloud AI Research, Google

- Arvind Jain – Founder/CEO, Glean

- May Habib – Co-Founder/CEO, Writer

- Richard Socher – Founder/CEO, You.com

To be honest, this portion felt more like a sales pitch than a research discussion. Each speaker highlighted how their AI solutions are boosting productivity and driving profits for enterprise customers. While interesting, it lacked the critical lens and depth of the earlier technical talks.

Final Thoughts: With Great Power…

One moment from the panel discussion really stuck with me. When asked about the future of AI, Ed Chi responded:

“Uncertainty causes anxiety. As humans, we want to know what’s going to happen. When we don’t know, we feel anxious. That’s exactly what’s happening with AI today.”

That quote lingered with me as I walked to my car. I told my brother I felt both excited and uneasy about what lies ahead. Dr. Song’s question echoed in my mind: What if AI empowers the wrong people? How do we ensure it’s used for good?

While there are no simple answers, I believe one critical factor is data quality. AI systems are only as good as the data we feed them. As a data engineer, I see our role as essential in guiding AI systems toward ethical and effective decision-making.

We are the ones who curate, clean, and manage the data AI learns from. It’s our responsibility to ensure that this data is accurate, fair, and useful, not just for internal company use but for the broader systems that may rely on it.

One of my favorite quotes comes from Spider-Man: “With great power comes great responsibility.”

While AI agents might appear to hold the power, I believe data professionals are the real power-holders. If we provide quality, thoughtful, and well-structured data, then AI agents stand a better chance of acting responsibly and effectively.

To my fellow data engineers: With great power comes great responsibility.

Let’s use that power to help build an AI-powered future that we can all be proud of.